A research team from Zhejiang University has introduced FedMcon, a new aggregation method for federated learning. The approach aims to improve how decentralized AI systems train models while keeping data private.

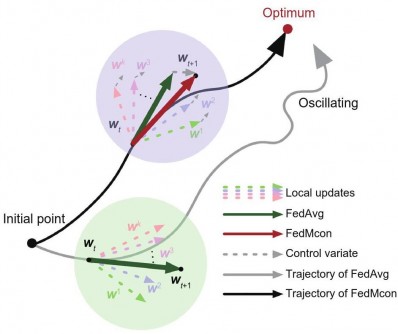

The traditional federated averaging algorithm, known as FedAvg, struggles when data distributions differ widely across clients, which often results in slow convergence and poor performance. To address this, the team introduced FedMcon, an adaptive aggregation method built around a learnable controller that is trained on a small proxy dataset. The controller learns how to merge local models into a global model that is tuned to a specific objective.
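To make the contrast concrete, here is a minimal sketch of the two aggregation styles described above. It is not the paper's implementation: the article does not describe the controller's architecture, so the "controller" here is assumed to be nothing more than one learnable weight per client, and the models are toy linear regressors. The names `fedavg`, `learned_aggregate`, and all the numbers are hypothetical.

```python
import numpy as np

def fedavg(client_params, num_samples):
    """FedAvg: average client parameters, weighted by local sample counts."""
    weights = np.asarray(num_samples, dtype=float)
    weights = weights / weights.sum()
    return weights @ np.stack(client_params)

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def mse(w, x, y):
    return float(np.mean((x @ w - y) ** 2))

def learned_aggregate(client_params, proxy_x, proxy_y, steps=200, lr=0.5):
    """Toy stand-in for a learnable controller (an assumption, not FedMcon):
    one logit per client, tuned by gradient descent so that the merged
    linear model has low squared error on the proxy dataset."""
    P = np.stack(client_params)                  # shape (clients, dims)
    logits = np.zeros(len(P))                    # start from uniform weights
    for _ in range(steps):
        a = softmax(logits)
        w = a @ P                                # merged global model
        resid = proxy_x @ w - proxy_y
        grad_w = 2.0 * proxy_x.T @ resid / len(proxy_y)
        grad_a = P @ grad_w                      # dLoss/d(aggregation weight)
        logits -= lr * a * (grad_a - a @ grad_a) # chain rule through softmax
    a = softmax(logits)
    return a @ P, a

# Made-up scenario: two clients near the true model, one large skewed client.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
proxy_x = rng.normal(size=(64, 2))               # small proxy dataset
proxy_y = proxy_x @ true_w
client_params = [np.array([2.1, -0.9]),
                 np.array([1.9, -1.1]),
                 np.array([-1.0, 3.0])]
num_samples = [100, 100, 800]

w_fedavg = fedavg(client_params, num_samples)
w_learned, alphas = learned_aggregate(client_params, proxy_x, proxy_y)
print("FedAvg proxy MSE: ", mse(w_fedavg, proxy_x, proxy_y))
print("Learned proxy MSE:", mse(w_learned, proxy_x, proxy_y))
print("Learned weights:  ", alphas)
```

In this toy setup, sample-count weighting lets the large skewed client dominate the average, while the learned weights shift toward the clients whose models fit the proxy data. A real controller would presumably be richer than a single weight vector, so treat this only as an illustration of the idea.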

According to the reported experiments, FedMcon achieves a communication speedup of up to 19 times in federated learning scenarios. The experiments used three well-known datasets (MovieLens 1M, FEMNIST, and CIFAR-10) in several settings, including cross-silo and cross-device environments. The findings indicate that FedMcon consistently outperforms existing methods on AUC (area under the ROC curve), HR (hit ratio), NDCG (normalized discounted cumulative gain), and top-1 accuracy, while also converging faster.
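For readers unfamiliar with the ranking metrics and the speedup figure mentioned above, the sketch below shows one common way to compute them. The article does not state the paper's exact evaluation protocol, so this assumes a leave-one-out setup with a single relevant item per user, and it assumes that communication speedup is the ratio of rounds needed to reach a target score. All function names are hypothetical.

```python
import math

def hit_ratio_at_k(ranked_items, target, k):
    """HR@K: 1 if the held-out item appears in the top-K list, else 0."""
    return 1.0 if target in ranked_items[:k] else 0.0

def ndcg_at_k(ranked_items, target, k):
    """NDCG@K with one relevant item: 1 / log2(position + 1),
    where position is 1-based, or 0 if the item is outside the top K."""
    top_k = ranked_items[:k]
    if target not in top_k:
        return 0.0
    rank = top_k.index(target)          # 0-based rank
    return 1.0 / math.log2(rank + 2)

def rounds_to_target(score_per_round, target):
    """First communication round (1-based) whose score reaches the target."""
    for round_idx, score in enumerate(score_per_round, start=1):
        if score >= target:
            return round_idx
    return None                         # target never reached

def communication_speedup(baseline_scores, method_scores, target):
    """Assumed definition: baseline rounds divided by method rounds."""
    base = rounds_to_target(baseline_scores, target)
    ours = rounds_to_target(method_scores, target)
    if base is None or ours is None:
        return None
    return base / ours

# Usage with a made-up ranking: the held-out item "c" is ranked third.
ranking = ["a", "b", "c", "d"]
print(hit_ratio_at_k(ranking, "c", k=3))
print(ndcg_at_k(ranking, "c", k=3))
```

Per-user values are typically averaged over all test users to give the reported HR and NDCG.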

The paper, entitled “FedMcon: an adaptive aggregation method for federated learning via meta controller”, is co-authored by Tao Shen, Zexi Li, Ziyu Zhao, Didi Zhu, Zheqi Lv, Kun Kuang, Shengyu Zhang, Chao Wu, and Fei Wu. The full text is available as open access at https://doi.org/10.1631/FITEE.2400530.

As AI continues to evolve, the implications of FedMcon could extend far beyond academic research, potentially transforming industries that rely on secure, efficient machine learning applications. This could include sectors like healthcare, finance, and autonomous vehicles, where data privacy is paramount.

Stay tuned for updates as the research community begins to explore applications of FedMcon. The method could make federated learning not only faster but also more robust and applicable across diverse scenarios.